Many students use Discord to communicate with others, whether for gaming, chatting with friends or school-related communication. As Discord’s popularity has grown, so have concerns about user safety.

One of Discord’s defining features is the anonymity of its users. As on most social media platforms, a user’s public profile includes only their username, profile picture and “About Me” section, all of which the user can customize. This anonymity can help people communicate without giving away private information, but it also leaves room for impersonation.

“It’s so easy to forge [an] identity,” said junior Iris Zhan. “It’s easy to manipulate people because there’s no security checks or real person checks.”

Content moderation on servers and in Direct Messages is another safety concern. Large servers are often overseen by a mix of human moderators and bots that filter messages for harassment and inappropriate content. However, this moderation is not perfect.

“[Harassment is] not moderated at all,” said freshman Emma An. “I feel like nobody really cares about it. It’s just like an everyday thing.”

In Direct Messages, Discord lets users choose how strictly incoming messages are automatically scanned for explicit content. However, many users do not take advantage of this setting.

“I don’t know what filters are out there other than restricting [who] sends me a message, but I’m not really sure if the filters do much,” said senior Claire Yokota. “If I’m being honest, people can use them if they want to, but a lot of people just keep them off.”

Discord does offer two-factor authentication, which adds a layer of security to user accounts. However, control over who can join a server is limited: if a private server’s invite link is leaked, anyone who has it can join. When outsiders flood a server this way, in what is known as a raid, members’ safety can be put at risk.

This imperfect moderation is evident in common scams, especially those involving Discord Nitro, a paid subscription that enhances the Discord experience with features such as animated emojis and larger file upload limits. These scams lure users with the promise of free Nitro, but once a user clicks the link, the scammers take over the account and send the same scam to everyone on the user’s friend list to spread it further.

Like any online platform, Discord carries risks that can grow as more people use it. Younger, more gullible users are especially susceptible to the dangers of online messaging. However, with careful moderation and safe internet habits, users can talk, text and connect with people near and far on Discord without worrying too much about their online safety.

“I think any person who is … mature would know that [it] is a scam,” Zhan said. “But of course, little kids or people who are new to the Internet are very likely to get scammed.”

These safety concerns become more serious for younger Discord users, who are more likely to fall for scams. Although Discord does not allow users under 13 to create accounts, people can easily lie about their age. Additionally, data from Statista, an online statistics platform, shows that Discord is more popular among adults ages 18-29 than among those ages 30-39 and 40-49.

“I think that younger people should try to be more cautious on all social media platforms because … people could take advantage of them,” Yokota said.

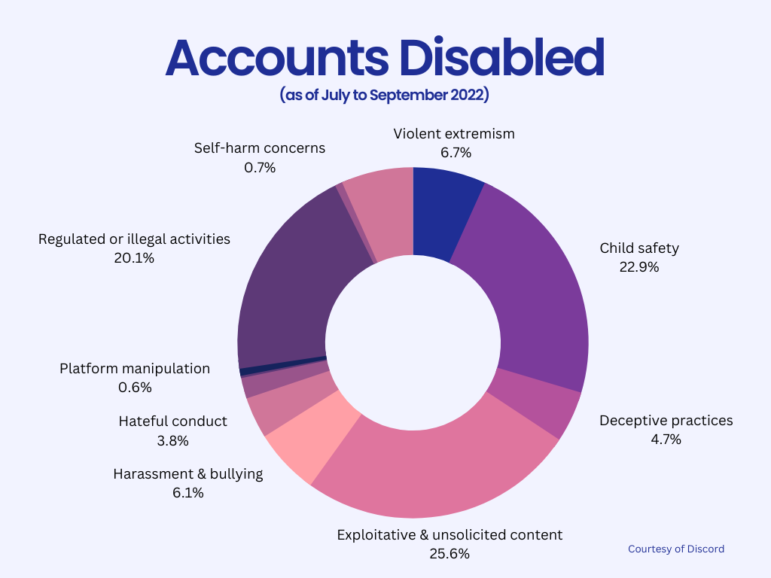

Despite these issues, Discord does make efforts to keep its platform safe. Every quarter, Discord publishes a Transparency Report detailing the user reports it received and the actions it took in response. Its report for July to September 2022 showed a heavy focus on child safety: a large share of the accounts disabled during that period were removed over child safety concerns. Discord also acts proactively against servers and accounts identified as risks to child safety. In the same period, Discord removed 14,451 servers over child safety concerns, 13,318 of which (about 92 percent) were proactive removals, meaning Discord detected and removed them on its own rather than in response to user reports.

Along with its Transparency Reports, Discord has published a blog post with tips on how to stay safe from scams and other risks on the platform. While the post’s existence confirms that safety issues on Discord are very real, publishing it is a step toward protecting users.