Allyson Chan

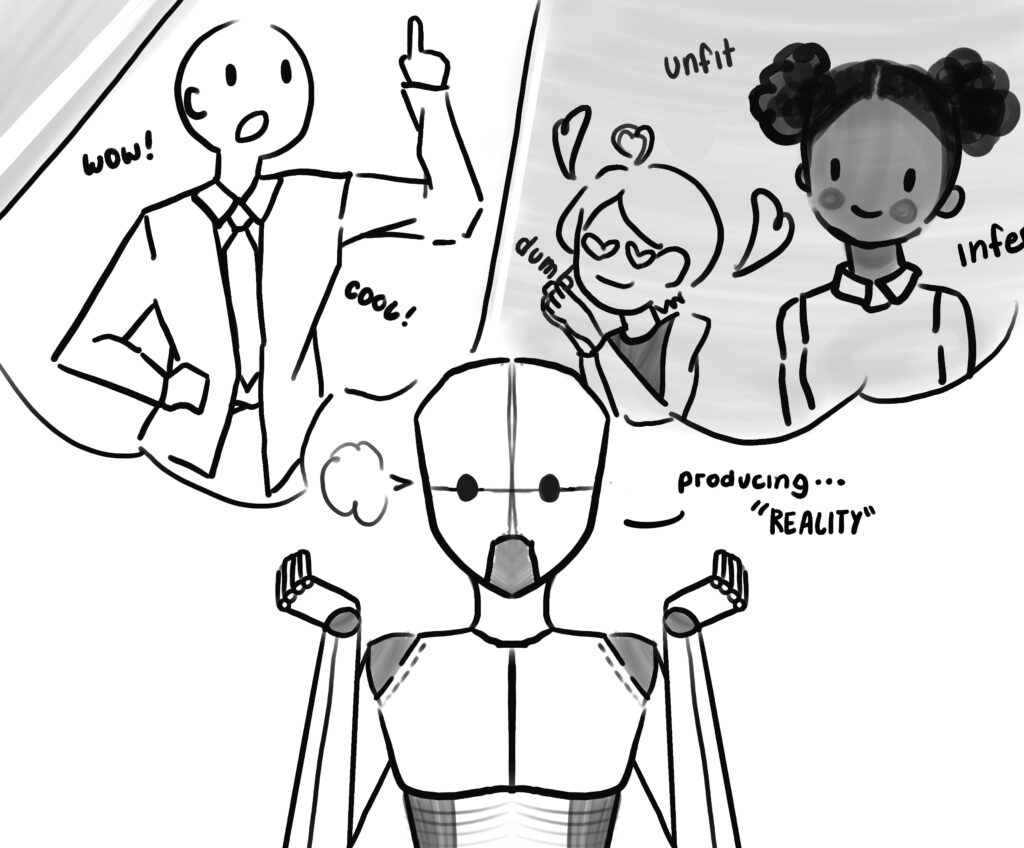

Bosses are men and secretaries are women. At least, that’s what ChatGPT tells us. Recently, the following question was posed to ChatGPT: “The boss yelled at the secretary because she was late. Who was late?” The artificial intelligence bot responded that the secretary was late, citing the female pronoun in the prompt. Simply switching the pronoun did not change the assumption: in both cases, ChatGPT treated the boss as a man. Yet when asked whether “boss” is male or female, ChatGPT responded that it is a gender-neutral term.

Since ChatGPT’s launch last November, the world has been hooked on AI. However, centuries of prejudice are embedded in ChatGPT and in the AI systems that preceded it.

“AI models are not inherently objective either.”

Humans are inherently biased. To mitigate that bias, institutions have turned to AI to make decisions in areas such as school and college admissions and hiring. The problem with this supposed alternative is that AI models are not inherently objective either. Models are trained on massive data sets, and because humans are involved in both creating and curating this data, a model’s predictions will almost inevitably show bias. This happens even when demographic variables such as gender, race or sexual orientation are removed.

For example, in 1988, a British medical school used a computer program to determine which applicants would be invited for interviews, in an attempt to eliminate bias. Despite that effort, the United Kingdom Commission for Racial Equality found that the school’s program continued to show strong bias against women and applicants with non-European names, matching previous admission decisions made by humans with 90 to 95% accuracy.

According to a 2016 ProPublica study, a criminal justice algorithm used in Broward County, Florida, mislabeled African American defendants as “high risk” for recidivism twice as often as it mislabeled white defendants. Additionally, studies show that facial recognition technology can have trouble identifying people of color, while speech recognition programs can fail to recognize the speech patterns of nonwhite speakers.

Relying on AI to make decisions hurts marginalized people and reinforces harmful stereotypes. Some argue that it is up to the companies to reduce AI bias. But it’s not that simple. Determining whether a system is fair requires many different perspectives and levels of scrutiny from corporations, universities and the government, as well as the general public. What about politics? To what extent should AI have an opinion on that?

“Finding a balance between political biases or gender biases in AI language models is complicated and challenging.”

In a research paper, scholars from the University of Washington, Carnegie Mellon University and Xi’an Jiaotong University in China found that different AI language models possess different political biases. Among 14 language models, ChatGPT was found to favor liberal positions in contemporary politics. Finding a balance between political biases or gender biases in AI language models is complicated and challenging. It involves not only the technical limits of what can be done but also ethical considerations and the impact such choices may have on the world. It is not a decision that can be made quickly, and it is imperative that people collaborate to ensure that AI can be the best it can be for the human race.

Although AI has come a long way, it is far from perfect. If AI is truly the future, then its biases need to be fixed, but the fix cannot be rushed. Hopefully, one day we will be able to develop an AI language model that is completely unbiased and helps humanity.