Like a tomato splattering into pellets of seeds and chunks of flesh, the introduction of ChatGPT has left a pungent yet tangy splash on the Internet. Generative artificial intelligence, or algorithms that produce media or text based on a user prompt, has expanded to platforms like Spotify, Photoshop, Snapchat and even Khan Academy. With so many platforms choosing to employ generative AI, it is worth investigating whether these tools are effective enough to warrant a lasting presence.

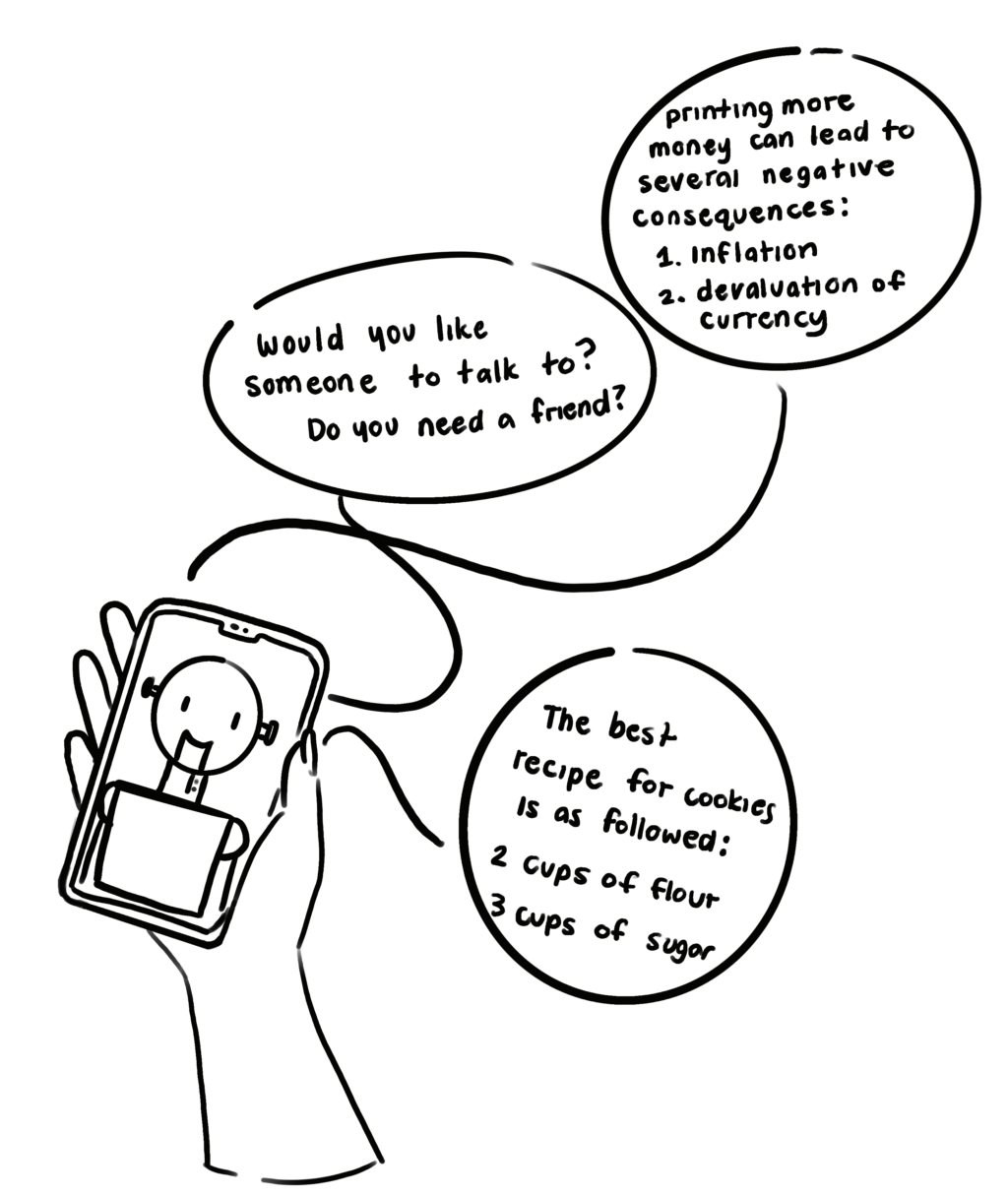

The Snapchat “My AI” feature lurks at the top of 800 million users’ friends lists. It is a well-known example of a poorly executed chatbot, a computer program that simulates human conversation with users.

“I’d rather talk to my real friends,” said sophomore Kallan Tu. “Sometimes [people] consult their [My AI] as therapists … [basically] talking to them as a joke. What are you supposed to use it for?”

AI implementations in educational settings are also littered with flaws, such as providing inaccurate answers to questions or being easily manipulated into acting contrary to their developers’ intentions, a process called jailbreaking.

“I was trying to get my Quizlet AI to tell me a story about ducks eating cheese, but it didn’t work because it wanted to tell me about the vocab words I was supposed to be learning,” Tu said. “But then I was able to get it slowly to go away from the vocab words and it actually told me a story about ducks eating cheese, it gave up asking me about vocab.”

Teachers are becoming increasingly wary of AI usage, turning to tools such as the Turnitin AI-writing detector to catch unethical work. However, this may address only a symptom of a larger issue rather than its cause.

“Banning [AI] is also incorrect,” said senior Aether Hua. “If you’re going to get a job, you’re not going to be replaced by AI itself, you’re going to be replaced by someone who knows how to use AI better than you. It’s [incorrect] to tell kids to not use AI because the genie is out of the bottle, you can’t put it back in so you have to learn how to use it … At the same time, when kids use it, they use it for cheating on tests [and] writing essays.”

A few classes, such as digital photography, embrace the idea of using AI as a tool.

“At the start of the year we were learning how to use the spot-fixing tools [in Photoshop],” said sophomore Tory Maciel. “For example, … there was this backyard and it had trash in it. We had to use a tool … to fill it in to [make the picture] look like it doesn’t have trash. If I was trying to do something whimsical or whatnot, I would use [this tool on my own].”

Yet creatives have largely not had an amiable relationship with generative AI. Artists worldwide have felt cheated by the introduction of AI art generators because the generators' models were trained on copyrighted datasets. As a result, some artists feel pressure to justify why human art cannot be replaced by AI.

“I watched this animation analysis of this fight scene,” said freshman Remy Chow. “The entire fight scene is on twos which means each picture is held for two frames … But in the critical hit … it’s actually on threes to emphasize the hit. Only a human can know when to adjust the frame rate.”

The widespread use of AI will continue sparking changes in how creativity is defined, how ownership of work is determined, and how convenience is valued. It is up to companies, consumers, and public policy to guide AI’s growth in conjunction with humanity’s.