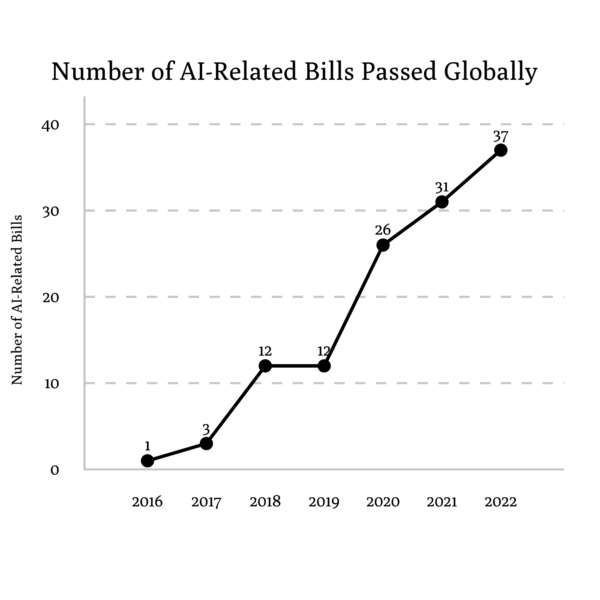

Source: 2023 AI Index Report

On Sept. 29, California Gov. Gavin Newsom vetoed Senate Bill 1047, or the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act.

This bill would have established state authority to oversee the development and usage of large AI models that cost over $100 million to build. Its requirements included written safety protocols, the capability to enact a kill switch and annual reports from third-party auditors.

Additionally, the bill would have provided whistleblower protections for employees and could have kept models from being made publicly available if they posed a risk to public safety. It also would have given the state exclusive authority to bring civil actions against developers who violated the bill.

“It would have forced developers to work around a lot of [constraints] and make it harder for them to develop this software,” said freshman Gabriella Pate. “But it would also make it safer to the people and the general public, which is our main priority and concern.”

Some think current laws do not prevent AI from developing at the expense of public safety. In his letter to the California State Senate, Newsom described the risks to public safety as “threats to our democratic process, the spread of misinformation and deepfakes, risks to online privacy, threats to critical infrastructure and disruptions in the workforce.”

“Tech companies [have] made us the product,” said Kris Reiss, Compressed Math 2 and Computer Science teacher. “They mine all of our behavior, our clicks, how long we look at a friend’s picture … AI is no different. If you think what you type into AI isn’t being stored for later use, I would ask you to think again. AI companies don’t have your back. They’re not here for a better, brighter future.”

As a result, many believe AI requires more legislation.

“[Because] lawmaking is so much slower than actual technological development, we aren’t keeping up [with AI],” said junior Hayden Ha. “California should be leading the nation in this sort of thing because I’ve seen way too much AI in politics and … misleading images online and it’s important to regulate that.”

In his letter, Newsom also explained his objections, writing that the bill was not based on enough analysis of AI models’ actual capabilities and that its strict regulations would hinder companies. Some students also saw problems with the bill.

“[Although] I took the stance [that] it would be better if we had more protections, I understand why it wasn’t passed,” Ma said. “Given how important California is to AI’s development in the world, we wouldn’t want to slow it down because then that could have a ripple effect, and … we don’t want to waste time.”

Others were wary of some of the bill’s supporters.

“[It’s] interesting how Elon Musk backs it, considering how his Twitter platform is breeding off of misinformation right now,” Ha said. “And that’s something we should take into consideration when doing this sort of thing.”

Some students believe minor adjustments would have been enough to get the bill approved.

“[Instead of shutting the model] down if it doesn’t do its intended purpose … just restructure it in the right direction,” said sophomore Sophia Tiu. “I feel like AI will be the future … so we shouldn’t inhibit it completely, but [simply] let it grow in a controlled manner.”

With the veto of SB 1047, California does not yet have a legislative framework to monitor the development of AI.